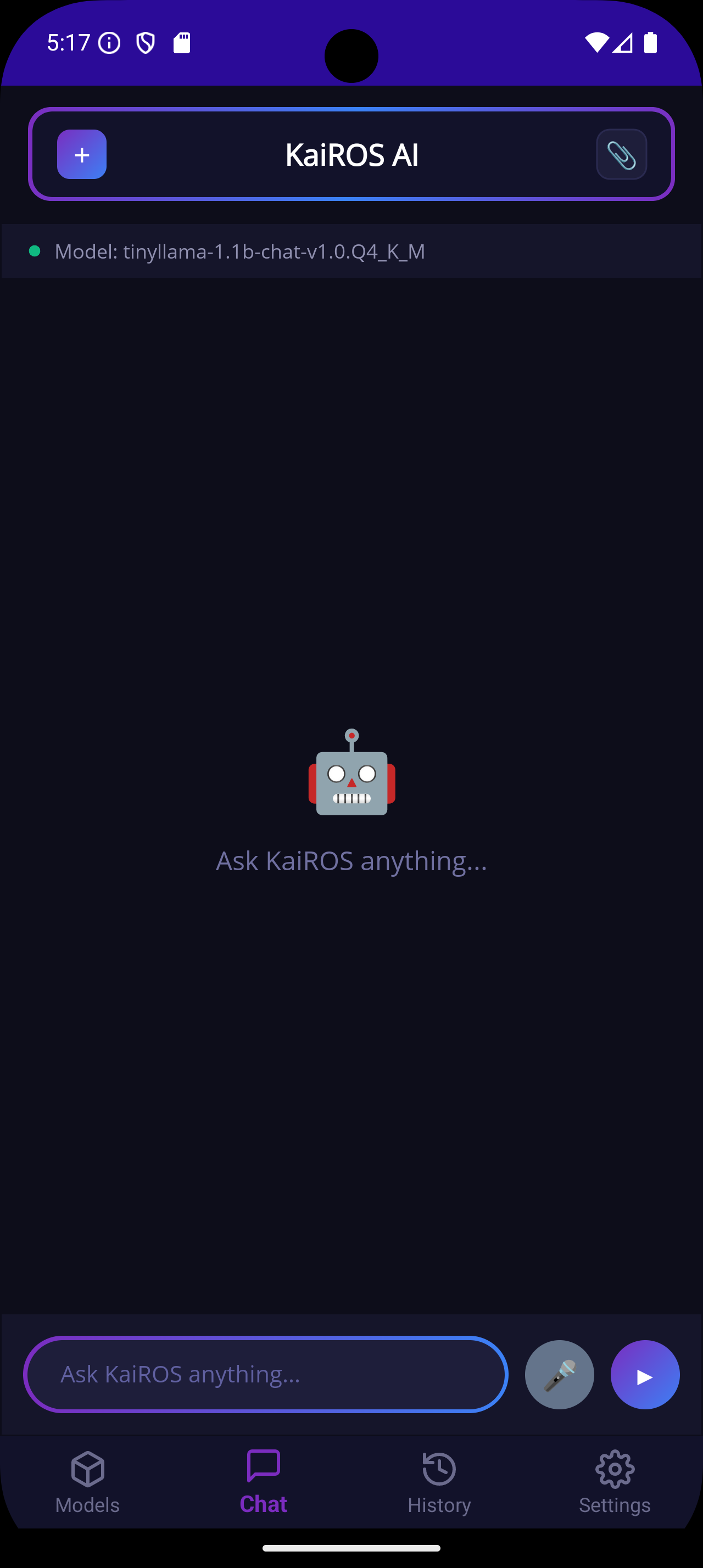

KaiROS AI

An open-source local AI assistant that runs large language models on your device (CPU or GPU) for private, offline conversational AI.

KaiROS AI is a powerful open-source local AI assistant for Windows and Android that lets you run large language models (LLMs) entirely on your own device, with no cloud dependency, prioritizing privacy and offline use. It offers a full chat interface with model management, document retrieval-augmented generation (RAG), streaming responses, GPU acceleration (CUDA, DirectML, or Vulkan), and a local REST API that developers can use to integrate locally hosted models into other applications.
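This summary does not document the REST API's endpoints or port. As a minimal sketch, assuming the server speaks an OpenAI-compatible chat-completions protocol (a common choice for tools such as Continue, but not confirmed here) at a hypothetical `http://localhost:5000`, a client could build requests and reassemble a streamed reply like this. The URL, path, and `model` name are all assumptions; check the project's own documentation for the real values.

```python
import json
import urllib.request

# ASSUMPTION: hypothetical base URL; the real host/port are not given in the summary.
BASE_URL = "http://localhost:5000"


def build_chat_request(prompt, model="local-model", stream=False):
    """Build an OpenAI-style chat-completions payload (assumed request format)."""
    return {
        "model": model,  # hypothetical model identifier
        "messages": [{"role": "user", "content": prompt}],
        "stream": stream,
    }


def parse_sse_chunks(lines):
    """Join the content deltas from OpenAI-style server-sent event lines.

    Each event line looks like 'data: {...}'; the stream ends with 'data: [DONE]'.
    """
    parts = []
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank keep-alive lines and comments
        data = line[len("data:"):].strip()
        if data == "[DONE]":
            break
        event = json.loads(data)
        delta = event["choices"][0].get("delta", {})
        parts.append(delta.get("content") or "")  # content may be null in the first chunk
    return "".join(parts)


def chat(prompt, base_url=BASE_URL):
    """Send a non-streaming request and return the assistant's reply text."""
    body = json.dumps(build_chat_request(prompt)).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/v1/chat/completions",  # ASSUMPTION: OpenAI-compatible path
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        reply = json.load(resp)
    return reply["choices"][0]["message"]["content"]
```

With a compatible server running, `chat("Explain RAG in one sentence.")` would return the reply as a string; `parse_sse_chunks` covers the streaming case by concatenating the partial tokens as they arrive.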

Run powerful LLMs right on your Windows PC or Android phone with zero cloud dependency — this privacy-first AI assistant keeps your conversations local whether you're at your desk or on the go.

Key features:

- 🔒 100% local inference on CPU or GPU with CUDA, DirectML, and Vulkan support

- 📄 RAG mode lets you chat with your PDFs, DOCX, and TXT files

- 🔌 Built-in REST API server integrates with the Continue extension for VS Code and other tools

- 📱 Cross-platform with full-featured Windows and Android apps
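The summary does not say how RAG mode retrieves passages. The sketch below only illustrates the general retrieve-then-prompt idea behind RAG, using a swapped-in toy technique: bag-of-words cosine similarity instead of the embedding models most RAG pipelines use. It assumes the text has already been extracted from the PDF, DOCX, or TXT file; `chunk_text`, `retrieve`, and `build_prompt` are hypothetical names, not KaiROS AI functions.

```python
import math
import re
from collections import Counter


def chunk_text(text, max_words=50):
    """Split extracted document text into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def _vector(text):
    """Bag-of-words term counts over lowercased alphanumeric tokens (toy stand-in for embeddings)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a, b):
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, chunks, k=2):
    """Return the k chunks most similar to the query (ties keep document order)."""
    q = _vector(query)
    ranked = sorted(chunks, key=lambda c: _cosine(q, _vector(c)), reverse=True)
    return ranked[:k]


def build_prompt(query, chunks, k=2):
    """Prepend the retrieved chunks to the user's question before sending it to the model."""
    context = "\n---\n".join(retrieve(query, chunks, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"
```

The resulting prompt would then go to the local model as an ordinary chat message; swapping `_vector` for a real embedding model changes retrieval quality, not the overall flow.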

This summary was generated by GitHub Copilot based on the project README.